Floating-point (ir)reproducibility¶

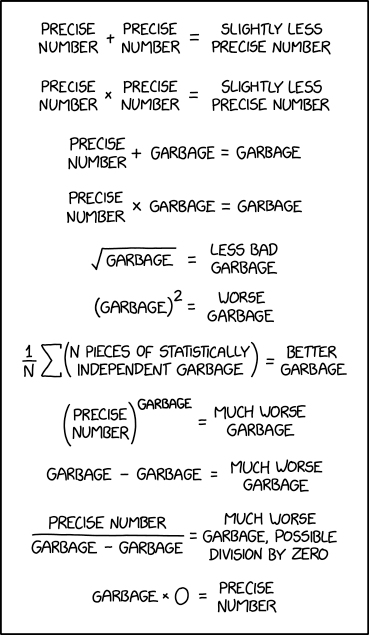

I'm going to show that even if you have identical data, software and OS, you still can't guarantee reproducibility across instances, at least when it comes to floating-point calculations. XKCD sums it up quite nicely:

Any software developer who writes numerical code needs to have some understanding of how floating-point numbers are represented, because they are a major source of software bugs and issues. Some people have a tendency to either assume the computer can't possibly get simple maths (slightly) wrong, or dismiss floating-point issues as rounding errors. Firstly, consider this motivating example of a very simple floating-point expression:

Python 3.8.5 (default, Jul 28 2020, 12:59:40)

[GCC 9.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> 0.5-0.4-0.1

-2.7755575615628914e-17

Feel free to try this in your language/platform of choice, chances are you will get the same wrong answer. So why can't a computer get such a simple sum correct?

So you can assume it's a small and systematic (i.e easily reproducible) error and live with it, but if (for example) you have derivatives traders screaming at you because their risk numbers are all over the place and they can't hedge their positions, you can't just accept it. The problem is, floating-point errors don't always stay small, and they aren't always easily reproducible.

I strongly recommend reading What Every Computer Scientist Should Know About Floating-Point Arithmetic. Now I'll try to explain the non-zero answer above.

The IEEE754 Standard¶

Here we will only consider the double precision format, as it's by far the most widely used. Single, half and quad precision all suffer from the same issues. Double-precision floating-point numbers are represented in 64 bits as follows:

- a sign bit

s, with zero representing positive and 1 negative. - an 11-bit exponent

e - a 52-bit mantissa

m

The value 1 looks like this in binary:

In other words,

The exponent bits need some explanation. It is biased by 1023, in other words a bit value of 1023 represents an exponent of zero. It also has two special values that are interpreted differently, namely 0 (subnormals) and 2047 (infinity and NaN), which we won't cover here. A value of 1 represents an exponent of \(2^{-1022}\) or approximately \(2.23\times10^{-308}\) and a value of 2046 is \(2^{1023}\) or approximately \(8.99\times10^{307}\). Thus the format can represent number over a range of well over 600 decimal orders of magnitude.

The mantissa is interpreted as a binary number in the range \(\big[1,2\big)\), with the leading 1 implied. The bits thus represent the fraction after the decimal point. This is why double precision is often referred to as having 53 bits of precision when the length of the mantissa is actually 52. Something of a moot point when one of those bits is fixed.

The fact the mantissa is binary means that only rational numbers that have a denominator that's a power of two can be represented in a finite number of digits. This contrasts with base 10, which can represent rational numbers with a denominator that's a combination of powers of 2 and 5, since these are the prime factors of 10.

So going back to the example above, we can see that neither the exact decimals \(0.4 = 2/5\) nor \(0.1 = 1/(2\times5)\) can be represented exactly in binary, but \(0.5=1/2\) can.

Here are the bit representations of the numbers in our expression:

| n | bits |

|---|---|

| 0.5 | \(\boxed{0}\boxed{01111111110}\boxed{0000000000000000000000000000000000000000000000000000}\) |

| 0.4 | \(\boxed{0}\boxed{01111111101}\boxed{1001100110011001100110011001100110011001100110011010}\) |

| 0.1 | \(\boxed{1}\boxed{01111111011}\boxed{1001100110011001100110011001100110011001100110011010}\) |

What's interesting is that for the second two, only the exponent is different (by 2). The mantissas are identical, and this makes perfect sense when you consider that 0.4 is exactly 4 times 0.1. So if we reorder the operations,

Python 3.8.5 (default, Jul 28 2020, 12:59:40)

[GCC 9.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> 0.5-(0.4+0.1)

0.0

we get the right answer. If you're that interested you can do it manually by first shifting the mantissa bits of 0.1 to the right 2 places and then add the two numbers (don't forget the implicit leading 1s) - the rounding errors cancel (to 52 bit precision) and you get exactly 0.5.

Sadly this kind of intervention - reordering the expression based on the values - isn't going be a very practical generic solution, but it gives some insight and understanding of what's going on under the hood.

Reproducibility¶

Algorithms¶

Typical operations that are "numerically unstable" include, but are in no way limited to

- computing a running sum of numbers - as the running total gets larger, so do the rounding errors. See Kahan summation for a solution to this.

- finite differencing - dividing small differences between numbers by another small number

- matrix inversions/solvers - for the reasons above

Platform¶

Ok, so you understand the limitations of floating point, but surely identical versions of your code will give the same (perhaps slightly wrong) answer wherever you run it? Wrong.

Firstly the platform (i.e. OS) has an impact. This is typically due to the runtime libraries that your code relies on, and for numeric reproducibility we're talking about the maths library (e.g. libm.so) and specifically how transcendental functions such as exp, log, etc are implemented. The IEEE754 standard doesn't (to my knowledge) mandate for full 52-bit accuracy (due to the performance tradeoff?) and so their accuracy is typically not guaranteed to be the full 52-bit width of the mantissa. This means that different implementations can give slightly different results. And even a difference in the least significant bit of the result can easily bubble up into a much larger error. I've seen it happen many times.

Hardware¶

Basic arithmetic operations aren't performed by a runtime library, they are implemented extremely efficiently in hardware. Traditionally (on x86) this was done by a x87 floating point coprocessor but is now largely done using vectorised SIMD (single-instruction-multiple-data) instructions. Things to note:

- the x87 uses an 80-bit internal representation of a double and thus can perform more accurate (internal) computations.

- SIMD floating-point instructions can operate simultaneously on multiple values but do not have the extra precision of the x87.

- SIMD implementations have gone from a 16-byte vector width (e.g. SSE2) to 32 (e.g. AVX) and even 64 (AVX512) on some hardware platforms.

- Newer SIMD implementations contain hardware implementations of some transcendental functions (e.g.

exp,sqrt), as well as reciprocals and fused multiply-add operations.

The wider the vector width you use, the faster your code will run, typically. Many maths libraries (e.g. Intel's MKL) contain multiple implementations of algorithms optimised for different architectures and will detect what hardware support is available, and use the best available, unless you explicitly force it to use a specific SIMD architecture.

So there's potentially a hardware-software interaction that you may not be aware of.

I'm going to replicate the behaviour of such a library using this C++ code that computes the dot product of a large vector:

#include <vector>

#include <algorithm>

#include <iostream>

#include <iomanip>

double dot(const double* a, const double* b, size_t n)

{

double x = 0.0;

// tell the compiler to unroll this loop if it can

#pragma omp simd reduction(+:x)

for (size_t i = 0; i < n; ++i)

{

x += a[i] * b[i];

}

return x/n;

}

int main()

{

size_t size = 512 * 1024;

double h = 1.0 / size;

std::vector<double> a;

size_t i = 0;

// a values decreasing linearly from 1 to zero

std::generate_n(std::back_inserter(a), size, [&]{ return 1.0-i++*h; });

// compute the inner product to self and display the result to full precision

double x0 = dot(a.data(), a.data(), size);

std::cout << std::setprecision(16) << x0 << std::endl;

}

The C++ compiler handily allows us to control what floating point instructions to use, so I can mimic the behaviour of a third-party maths library that selects the architecture at runtime:

#!/bin/bash

# use the non-vectorised floating-point unit

g++ -O3 -g src/float.cpp -mfpmath=387 -o bin/float-387 && echo -n x87:; bin/float-387

# use 2 double width vector instructions

g++ -O3 -g src/float.cpp -fopenmp -msse4.2 -o bin/float-sse4.2 && echo -n SSE4.2:; bin/float-sse4.2

# use 4 double width vector instructions

g++ -O3 -g src/float.cpp -fopenmp -mavx2 -o bin/float-avx2 && echo -n AVX2:; bin/float-avx2

Here's the output:

x87:0.3333342870082561

SSE4.2:0.3333342870067009

AVX2:0.3333342870071102

Why the differences¶

This code is simply multiplying and adding. The problem really comes from the adding: as the running sum gets larger, successively adding small values involves greater and greater rounding errors. In the vectorised versions, the loop is unrolled and two or four running sums are maintained (which are themselves summed at the end) so the order of addition is different, and thus rounding errors are different. The differences are admittedly small, but if these were intermediate results, they could easily propagate to large differences in the end result.

Interestingly, whilst the x87 implementation is potentially the most accurate (due to it's 80-bit internals) it's actually the least accurate because it's single running sum is prone to more rounding errors.

True story¶

The "screaming derivatives trader" was real (ok slightly exaggerated), but we were sometimes getting nonsensical risk numbers from a valuation model that was a nonlinear optimisation (requiring matrix inversions) of an objective function that was itself a Monte-Carlo simulation, and sensitivities calculated by finite-differencing results collated from various nodes on a large compute grid. And the point, as pointed out by XKCD, is that small errors in algorithms like these can accumulate into large ones in the results.

Eventually, we managed to reproduce the problem and pinpoint two specific machines on the grid that consistently reproduced the problem. Trouble was, not only were they both running identical versions of the software, they were both running the same OS, and they even had identical hardware. The only difference was that one was virtualised, the other "bare metal".

We were already (painfully) aware that hardware with different SIMD instruction sets could give different results, and that our grid had a range of cores of varying ages, and that we were using a highly optimised third party maths library that would use the best-available SIMD instructions on the hardware, unless otherwise directed, and I thought I had already solved this by forcing the library it to only use SIMD instructions that all of our cores supported (SSSE3 if I recall correctly).

It turned out that the virtualisation layer was incorrectly reporting what SIMD architecture was on the machine, and so our (actually my) code didn't think it needed to force the issue. But when the code ran, it wasn't using the same SIMD implementation as the rest of the grid, and the risk numbers blew up as a result. Actually fixing this was trivial, but getting to this point - where we knew what on earth was going wrong - took a teeny bit more effort!

In summary¶

- Achieving exact reproducibility in floating-point computations is hard.

- Reproducibility issues can arise from any of the software, the operating system, or even the hardware, depending on the way your software interacts with it.

- Using SIMD can give you big floating-point performance improvements but at the expense of not getting exactly the same answers. Often, you may not even know what CPU-level instructions you are using, and tracking down the source of differences can be tricky, so it pays to be aware of potential issues.

- Containers (docker) and virtual machines are no less as susceptible: whilst they allow you to ensure the software is exactly reproducible, you still have no control over what hardware they are run on.