How (not) to supply data¶

If you provide packaged, versioned, well-documented software as part of your academic output, then you make your work much more easily reproducible (see my previous post). However, if that software needs some input data to do its thing, then you also need to apply the same principles to the data: somebody with a different dataset wont be able to produce your results, despite having identical software.

This applies whether you are providing the data yourself, or if you are using data from a third-party source. If the latter, you of course cite all your data sources.

Data is some way behind the curve compared to software: alarm bells would immediately ring if one of your software dependencies was completely unversioned, perhaps not even under source control, and yet data is all-too-often like this. The FAIR principles were established/published in 2016, and in summary state that data should be Findable, Accessible, Interoperable, and Reusable.

Data providers can only anticipate how consumers use their data, and can be slow to change from older/traditional formats which may be less suitable for modern use-cases, be they software-led (raw data) or human-led (visualisation). This inevitably leads to some preprocessing being required, which itself needs to be reproducible. It's common to download a spreadsheet, copy and paste some data into a new spreadsheet, apply some formulas, change some names, then save it as a csv file. This is completely opaque and if any misinterpretations or errors creep in then they are hidden: it needs to be transparent.

Here's a fairly simple example...

Population estimates¶

Let's say we need population projections by for England by local authority, sex and (5-year) age group. We'd like it in some non-proprietary format that we could load straight into (e.g.) python or R and do something with it. A csv file will do just fine for this.

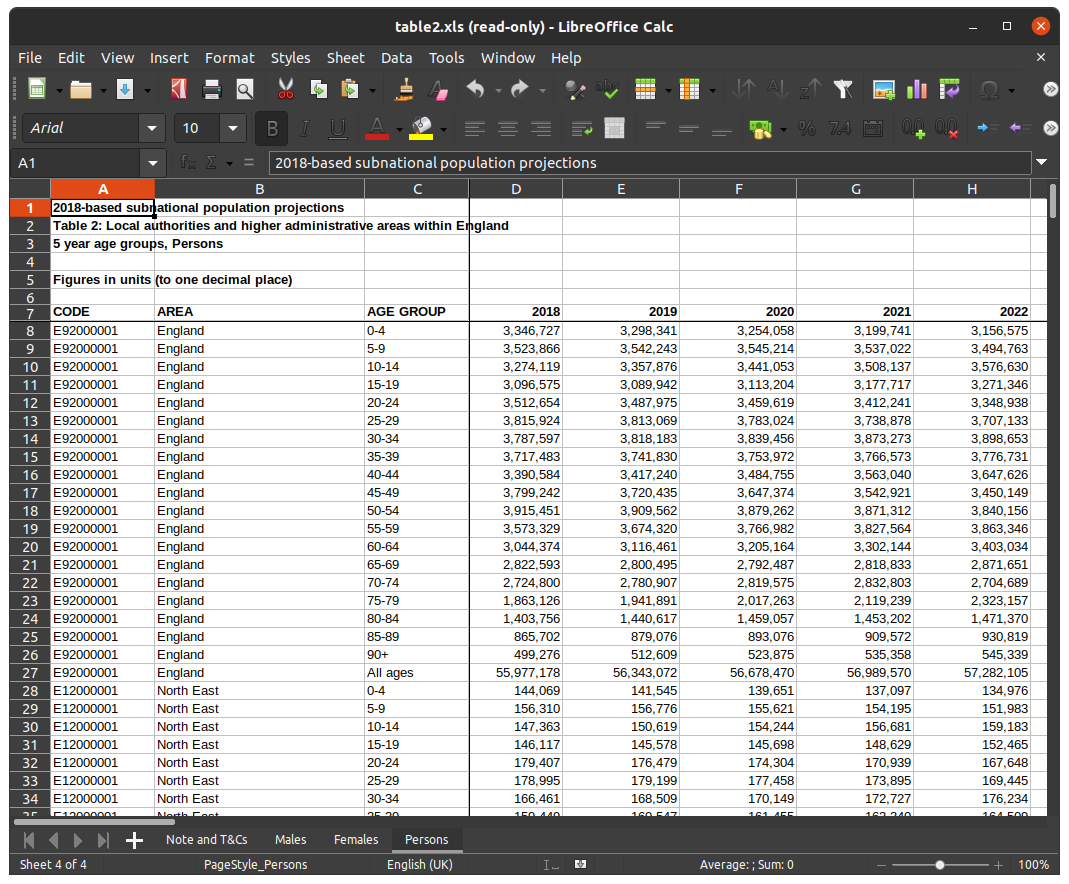

ONS produce this data, and here's the 2018 numbers from their website. It's an Excel spreadsheet (despite some very high-profile Excel-related data blunders):

Firstly, as data goes this isn't particularly big, unstructured, or dirty data. It's pretty straightforward, but the question that springs to my mind is: what audience is this format aimed at? It's got all sorts of features like multiple tabs, frozen rows, formatted text, comma-number separators than suggest it's been designed for humans to pore over. Really? But who gets insight directly from huge tables of numbers, surely they'd visualise it more graphically in some way? It seems to be in some no-man's land between user-friendliness and process-friendliness. I wouldn't give it a very high rating against the FAIR principles.

To get the exact dataset I want I could do the following:

- delete the first six rows of the Males worksheet

- delete all the rows where the AGE GROUP column value is "All ages", otherwise I'll double-count the numbers

- delete all the rows where the AREA column value is a country, a region or a county, to avoid more double-counting

- create a new column called SEX and fill it with (e.g.) "M"

- remove the comma-separator formatting from the numbers and the "freeze columns" setting

- copy the remaining contents into a new worksheet

- repeat all the steps above in the Females worksheet, but adding (e.g.) "F" in the new SEX column

- copy this to the new sheet, appending the male data

- save the new worksheet as a csv file

Despite this data not being particularly messy or unstructured, I have to go though some fairly tedious, manual and error-prone steps to get the data exactly as I need it. And if I'm using this data to generate some published results, do I really want people to have to repeat these steps to replicate my work? I could detail the manual preprocessing steps in the text, but it detracts somewhat from the flow of the paper. Another possibility is I could publish the preprocessed data and reference it in my paper. But if it transpires there is an error in the original data, which gets corrected, or updated, then neither I nor my readers get the correction.

Perhaps a better solution would be to automate the steps I took, including downloading the data, and packages like pandas (for python) are perfect for this, although there's a still a few hoops to jump though:

import pandas as pd

import requests

from pathlib import Path

def download(url):

# reading url directly with pd.read_excel(url) gives 403

# but using requests works fine (must be user-agent string)

response = requests.get(url)

if response.status_code != 200:

print("%d error")

return None

return response.content

which is a generic function I could use purely for fetching just about any raw dataset from a URL. Then I need another function, specific to this dataset, to actually process the data:

def preprocess(raw_data, output_file):

dataset = pd.DataFrame()

for tab in ["Males", "Females"]:

data = pd.read_excel(raw_data, sheet_name=tab, header=6)

# remove duplicate age counts

data = data[data["AGE GROUP"] != "All ages"]

# remove non-LAD counts

data = data[data["CODE"].str.match(r'^E0[6789]*')]

# add sex column

data["SEX"] = tab[0]

# append data

dataset = dataset.append(data)

# cache data

dataset.to_csv(output_file, index=False)

and putting this together, we code some logic that efficiently caches the dataset after initially downloading and preprocessing, then returns a DataFrame:

# 2018 SNPP 5y

url = "https://www.ons.gov.uk/file?uri=%2fpeoplepopulationandcommunity%2fpopulationandmigration%2fpopulationprojections%2fdatasets%2flocalauthoritiesinenglandtable2%2f2018based/table2.xls"

cached_data = Path("./england_snpp_2018.csv")

if not cached_data.is_file():

preprocess(download(url), cached_data)

dataset = pd.read_csv(cached_data)

print(dataset.head())

which produces

CODE AREA AGE GROUP 2018 2019 2020 2021 2022 2023 2024 ... 2035 2036 2037 2038 2039 2040 2041 2042 2043 SEX

0 E06000047 County Durham 0-4 13849 13526.5 13343.5 13121.8 12976.9 12939.8 12930.1 ... 13351.0 13453.3 13561.5 13672.8 13785.2 13894.1 13992.6 14075.1 14137.3 M

1 E06000047 County Durham 5-9 15443 15626.6 15524.8 15315.7 15085.3 14677.6 14335.6 ... 13665.4 13707.3 13764.3 13837.1 13921.7 14015.8 14119.1 14229.4 14344.3 M

2 E06000047 County Durham 10-14 14610 14997.3 15386.8 15795.2 15967.3 16157.0 16320.8 ... 14227.7 14206.5 14206.9 14219.8 14234.1 14259.6 14298.2 14352.6 14423.7 M

3 E06000047 County Durham 15-19 15022 14936.7 15001.9 15290.5 15812.4 16310.0 16692.7 ... 16627.9 16339.9 16130.7 15999.2 15975.2 15930.3 15898.4 15891.0 15898.2 M

4 E06000047 County Durham 20-24 18599 18613.4 18657.8 18538.9 18394.5 18291.3 18241.3 ... 21551.0 21395.9 21109.8 20755.1 20381.3 20095.5 19787.6 19558.4 19415.6 M

[5 rows x 30 columns]

Which is great, I can just ensure that this code is in the repo that I cite in my work.

APIs¶

Many data providers provide an API, which allows users to directly query a data provider to get the specific subset they require from a large data, and often allows for customisable output formats.

A good example of this is NomisWeb, which provide UK population (particularly census) data from various sources, including ONS data. Their website provides a "wizard" allowing users to construct a query to get a specific dataset. For large datasets (e.g. census data), this is far more efficient than downloading a bulk dataset then filtering for the (small) specific subset you want.

Using the Nomisweb query builder I can construct this url:

https://www.nomisweb.co.uk/api/v01/dataset/NM_31_1.data.tsv?geography=1811939329...1811939332,1811939334...1811939336,1811939338...1811939497,1811939499...1811939501,1811939503,1811939505...1811939507,1811939509...1811939517,1811939519,1811939520,1811939524...1811939570,1811939575...1811939599,1811939601...1811939628,1811939630...1811939634,1811939636...1811939647,1811939649,1811939655...1811939664,1811939667...1811939680,1811939682,1811939683,1811939685,1811939687...1811939704,1811939707,1811939708,1811939710,1811939712...1811939717,1811939719,1811939720,1811939722...1811939730,1811939757...1811939767&date=latestMINUS1&sex=5,6&age=1...19&measures=20100

which gives me a very similar dataset to the one in the previous section, and I can then amend my code to download (and potentially cache) the data as and when I need it. I can also get rid of the preprocessing code, which simplifies things.

Data persistence¶

The trouble is that neither of the two approaches address a fundamental problem that if you are not the source of the data, you are not in control of it. Your reproducibility will be compromised if

- the provider moves the data (e.g. to a different URL)

- the provider changes the format of the data

- the provider amends the values in data, for whatever reason

Thankfully there is a solution, but it's one that only the data providers themselves can implement: the Digital Object Identifier (DOI) system. It's already pretty standard in academic circles, and uses a persistent identifier to provide a citable, recoverable reference to an (immutable?) digital asset that is commonly a paper, software, or a dataset.

And by providing a DOI for your data, you are making it easier for people to cite you.

If your data providers don't already use the DOI system, please encourage them to do so. Finally, the UK government are looking into it so hopefully this should filter through to the ONS at some point.

Third-party organisations such as zenodo can be used to store the content referenced by the DOI.

The future?¶

There's still a problem: you may have a DOI, but what if the data itself gets deleted, lost or hacked (i.e. modified maliciously)?

To me, Blockchain technologies seem to be a perfect solution for reproducible data: a blockchain is an immutable, persistent, distributed database which would be ideal for storing DOIs, and either the data itself (or a cryptographic hash of it, and a URL).

Smart contracts could be used to encode any preprocessing steps you need to do to the raw data, or as an alternative to an API, and your software would need only to execute the contract to get the exact dataset it requires. Immutability is guaranteed, so local cacheing isn't liable to get out of step with the source data. In your published article, you would simply reference the source dataset and the smart contract that you created (or used) to preprocess the data.

A technology like interplanetary file system IPFS could provide the scalability to store the enormous amount of data, potentially making your locally-cached copy of the data part of the database itself.

For data where access is restricted it can of course be encrypted, and homomorphic encryption technologies could permit preprocessing of the data to be done securely, operating directly on the encrypted data, and resulting in encrypted preprocessed output. Thus neither the original content or even the preprocessed data is ever revealed to anyone without the encryption keys. I've put togther a demo of how this could work, using the above dataset: see hedfq.

Finally, to make this work smoothly, some form of incentivisation for participants may be required, perhaps something along the lines of a H-index for datasets and the associated preprocessing "smart contracts"?

But, back in the present, how about a...

Manifesto for data providers¶

All the effort you have expended to make your software reproducible is wasted if you don't apply the same principles to the data it consumes. This can be tricky when the data is from a third party. You could (should?) name-and-shame them in your work, but perhaps more productive would be to encourage them to adopt some or all of these guidelines:

- version your datasets with a DOI

- try to anticipate the (changing) needs of your consumers (ask them!)

- don't use a proprietary data format. Excel should not be the default tool for data science!

- make the raw data easily consumable by code without undue preprocessing (you can always provide a human-friendly visualisation tool as well)

- consider any dataset you publish to be immutable: if it needs corrections or amendments, treat this as a brand new dataset

- don't change the location (i.e. url) of the data without some form of notification

- APIs are better than static links if you have the data to justify it and the resources to implement one

- consult the GO-FAIR community's guidance on data stewardship.